Test-Driven vs Test-First

I have observed a lot of confusion out there about the term Test-Driven Development (or TDD). Let me demonstrate with a paraphrased comment from a former colleague: “I tried Test-Driven Development once, and it was a disaster, and a huge waste of time. I spent a week writing all the test cases, then when I got to writing the code, I realized most of my test cases weren’t right, and had to be rewritten anyway.”

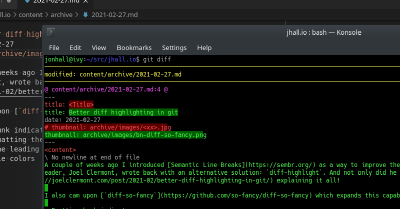

Better diff highlighting in git

A couple of weeks ago I introduced Semantic Line Breaks as a way to improve the readability of rich text formats, like Markdown. One reader, Joel Clermont, wrote back with an alternative solution: diff-highlight. And not only did he write to tell me about it, but he wrote a post explaining it all! I also came upon diff-so-fancy, which expands this capability even more, optionally providing:

- Prettier hunk indicators
- Pretty-formatting of the diff headers for each file
- Removing the leading + or - sign from each line, which is redundant when using colors
- Customizable colors
- And more

The one valid use for a code coverage percentage

After railing against using a test code coverage percentage to measure the value of your tests, let me offer one small exception. There is one way in which I find test coverage to be useful: comparing the delta of test coverage against expectations. Strictly speaking, this doesn’t need to be a measure of coverage percentage. It can be a report of raw lines (or branches, or statements) covered. The idea is: I don’t generally expect this number to go drastically down (or for that matter, up).
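The comparison described above can be sketched as a small check. This is only an illustration: the function name, the `tolerance` parameter, and the numbers are hypothetical, and the covered-line counts are assumed to come from whatever coverage tool you already use.

```python
def coverage_delta_is_surprising(previous_covered: int,
                                 current_covered: int,
                                 tolerance: int = 50) -> bool:
    """Return True when the raw count of covered lines moved more than expected.

    `tolerance` is a made-up threshold; pick one that matches the size of a
    typical change in your own codebase.
    """
    # A drastic move in either direction (down or up) deserves a closer look.
    return abs(current_covered - previous_covered) > tolerance


if __name__ == "__main__":
    # A small change: 10 fewer covered lines is within the tolerance.
    print(coverage_delta_is_surprising(1200, 1190))
    # A drastic drop: 300 fewer covered lines is flagged.
    print(coverage_delta_is_surprising(1200, 900))
```

The point of the check is not the number itself, but whether the change matches what I expected from the work I just did.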

Properties of good unit tests

I’ve said calculating code coverage is overrated. But I’m also a strong believer in good testing. So what makes for good tests? This is a nonexhaustive list of some characteristics I look for when writing tests, or reviewing tests others have written. What would you add?

- Independent. A test should work by itself, or when executed with others. Tests that depend on state configured by previous tests are broken.
- Deterministic. Obviously a test that has a non-deterministic result is not a good test.
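A minimal sketch of those first two properties, assuming Python's standard `unittest` and `random` modules. The `pick_winner` function and the entrant names are hypothetical, invented only for this example.

```python
import random
import unittest


def pick_winner(entrants, rng):
    """Hypothetical function under test: choose one entrant using the given RNG."""
    return rng.choice(entrants)


class PickWinnerTest(unittest.TestCase):
    def setUp(self):
        # Independent: every test gets fresh state, so no test relies on
        # anything a previous test left behind.
        self.entrants = ["ana", "ben", "cy"]
        # Deterministic: a seeded generator replaces the global, unseeded one.
        self.rng = random.Random(42)

    def test_winner_is_an_entrant(self):
        self.assertIn(pick_winner(self.entrants, self.rng), self.entrants)

    def test_same_seed_gives_same_winner(self):
        first = pick_winner(self.entrants, random.Random(42))
        second = pick_winner(self.entrants, random.Random(42))
        self.assertEqual(first, second)


if __name__ == "__main__":
    unittest.main()
```

Passing the random number generator in as an argument is one design choice among several. The same idea applies to clocks, network calls, and anything else that can vary between runs.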

Complete code coverage

Yesterday I said that code coverage percentages are overrated. But there’s something in the concept of “code coverage” that gets at something valuable. Often when asked what percentage of code coverage I aim for, I respond with “Complete code coverage.” Which is not the same as 100%. If I were forced to define my concept of complete code coverage, it would probably go something like this: Every meaningful input condition is tested, under every meaningful state.

Code coverage percentage is overrated

“Percent code coverage” seems to get a lot of attention. A common question, anywhere programmers congregate, seems to be “What’s the ideal test coverage percentage?” Some say 80%. Some “purists” say 100%. What’s the right answer? Well, what do these numbers represent? Typically (depending on the exact language/tooling you’re using) they represent the percentage of lines, conditional branches, or statements executed during the execution of a test suite. In theory, proponents say, 100% test coverage would mean that you’ve tested 100% of possible execution paths.
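To make the gap between "lines executed" and "execution paths tested" concrete, here is a small sketch. The `shipping_cost` function and its numbers are hypothetical, made up for illustration only.

```python
def shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical function with two independent conditions, so four paths."""
    cost = 5.0
    if weight_kg > 10:
        cost += 2.0  # surcharge for heavy parcels
    if express:
        cost *= 2  # express doubles the total
    return cost


# This single call executes every line of the function: 100% line coverage.
assert shipping_cost(20, True) == 14.0

# Adding this call takes each condition both ways: 100% branch coverage.
assert shipping_cost(1, False) == 5.0

# Two of the four paths have still never run under test:
#   heavy and not express  -> shipping_cost(20, False)
#   light and express      -> shipping_cost(1, True)
```

With only two conditions, a suite that reports 100% has exercised half of the possible paths. Each additional independent condition doubles the number of paths, while the reported percentage stays the same.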

A randomly failing test is a failing test

You’ve just spent several hours building an awesome new feature for your application. You wrote automated tests for it. You manually tested everything in the staging environment. You even asked your colleague to stress-test it for you, and they couldn’t make it crash. Perfect! Now you push it up to your version control system, and… A test fails! Not one of your new tests. An old test. Dagnabbit! You must have introduced a regression somewhere along the line.

The efficiency of creativity

Software development is, in some sense, all about efficiency. Except when computers are used for entertainment (gaming, for example), pretty much their entire reason for existence is to make certain tasks more efficient. When we write software, we’re generally doing so to automate or simplify some task that a human, or another less-efficient machine, might otherwise be doing. Certain types of developers dedicate large parts of their careers to making the development of software more efficient.

Tip of the day: Semantic Line Breaks

I recently stumbled upon a usability tip for editing certain rich text formats, such as Markdown. Have you ever been bothered by documentation diffs that look something like this? Enter SemBr, the Semantic Line Breaks Specification, which provides a formal spec. But the TL;DR version is: When using rich text formats that allow it, press Enter after every significant punctuation mark. The end result:

Making a mockery of mocking

Unit testing and TDD are all the rage. This is a good thing! Not long ago, most conversations I had about testing turned into a battle of philosophy about whether testing should happen. Nowadays, it seems that battle has been largely won. But as soon as you solve the big problem, the smaller problems start to show up. And that brings me to today’s “small problem”: The mockery that is “mocking”.

You're probably using the wrong definition of Continuous Integration

Until recently, I was using the wrong definition of Continuous Integration. You probably are, too. This is a bit of an embarrassing mistake, at least for me, because I’ve heard the proper definition many times. And I talk about, and advocate for, CI all the time. If you had asked me, I probably would have said something like “Automatically test your code, before it’s merged.” Interestingly, the simplest definition of continuous integration says nothing about testing, automatic or otherwise.