The one valid use for a code coverage percentage

After railing against using a test code coverage percentage to measure the value of your tests, let me offer one small exception.

There is one way in which I find test coverage to be useful: comparing the delta of test coverage against expectations.

Strictly speaking, this doesn’t need to be a measure of coverage percentage. It can be a report of raw lines (or branches, or statements) covered.

The idea is: I don’t generally expect this number to go drastically down (or for that matter, up). If I see a large change in this number, it’s probably an indication that I’ve made a mistake, such as not committing some tests I’ve written, or accidentally disabling tests.
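As a rough sketch of that check, assuming you can get raw line counts out of your coverage tool before and after a change: compare how much the covered-line count moved with how much the code itself moved, and flag the change when the two disagree by more than some tolerance. The `CoverageSnapshot` type, the function name, and the default tolerance are all made up for illustration; they are not part of any real tool.

```python
from dataclasses import dataclass


@dataclass
class CoverageSnapshot:
    """Raw counts from one coverage run (hypothetical structure)."""
    total_lines: int    # executable lines the coverage tool measured
    covered_lines: int  # lines executed by at least one test


def coverage_delta_is_surprising(before, after, tolerance=10):
    """Return True when covered lines moved very differently from the code.

    `tolerance` is an arbitrary number of lines, chosen for illustration;
    pick whatever counts as a "large change" for your project.
    """
    code_delta = after.total_lines - before.total_lines
    covered_delta = after.covered_lines - before.covered_lines
    return abs(covered_delta - code_delta) > tolerance


# Hypothetical numbers: 6 lines added, 6 more lines covered. No surprise.
expected = coverage_delta_is_surprising(
    CoverageSnapshot(total_lines=100, covered_lines=80),
    CoverageSnapshot(total_lines=106, covered_lines=86),
)

# Hypothetical numbers: 6 lines added, but covered lines collapse,
# as might happen if a test suite was accidentally disabled.
collapsed = coverage_delta_is_surprising(
    CoverageSnapshot(total_lines=100, covered_lines=80),
    CoverageSnapshot(total_lines=106, covered_lines=10),
)
```

Note that this deliberately works on raw counts rather than a percentage, and that it only says "look closer"; it says nothing about whether the tests themselves are meaningful.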

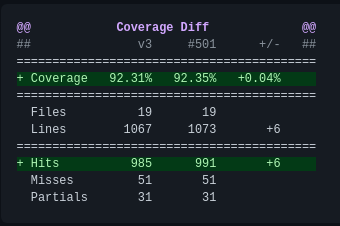

In this example (generated by codecov.io), I see that I’ve added 6 lines of code, and that I’ve added 6 lines of test coverage. No surprises here. Assuming I wrote the new tests meaningfully, we’re golden!

The risk of adding a tool like this to your test pipeline is the temptation to use these test coverage percentages the wrong way and start aiming for an arbitrary percentage again, instead of focusing on meaningful, complete test coverage. For this reason, I only introduce these sorts of tools to teams well after they have learned to stop talking about code coverage percentages.