When is 95% test coverage worse than 5%?

Test coverage for its own sake is dangerous. It leads to foolish technical choices.

Test coverage. What a hot topic.

What’s the ideal percentage?

100% maybe?

Maybe that’s unrealistic. How about 80%?

Here’s the part where you expect me to say “It depends”, right?

I’m not gonna.

Instead, I’ll tell you a story.

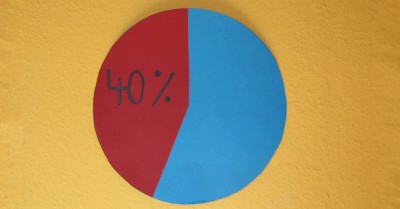

Bob joined a project that had a measly 5% test coverage. He recognized that this was a really low number, so he spent three months refactoring the code to add tests. He had no other goal in mind except to get the code testable, and under test. A laudable goal, surely.

At the end, he had indeed achieved his 95% test coverage goal. And they were all REALLY GOOD tests. Not assertion-less tests that just executed code, or any other shenanigans. They were GOOD tests.

The problem? He broke production two dozen times in the process. For example, when he added tests to the inventory component, he unwittingly broke the checkout component, which had been reading some global state from the inventory component. Since the checkout component wasn’t yet under test, this problem wasn’t discovered until it was in production, and customers had to call customer service to complain.

In a parallel universe, Alice was hired instead of Bob.

She also noticed that the 5% test coverage seemed really low, but she took a different approach. She recognized that although 95% of the code wasn’t covered by formal tests, it was effectively tested by virtue of the fact that customers were happily using it. So rather than a large-scale refactoring, she convinced the team to adopt a simple policy: Henceforth, all behavior changes will be accompanied by tests.

Three months later, the project had reached 15% test coverage, compared to Bob’s 95%. But here’s the beautiful thing: Production didn’t go down a single time as the result of adding tests.

Not only that, but the tests behind those 10 additional percentage points were of much higher strategic value than Bob’s, since they covered the volatile portions of the code: the parts that were actually being changed.

The moral of the story?

Test coverage for its own sake is dangerous. It leads to foolish technical choices.

If you can achieve 95% test coverage naturally and organically, that’s great. If you have to force it, you’re likely to introduce more pain than you’re saving.

In case you missed it, Bryan Finster and I talked about this, among other things, in a recent episode of the Tiny DevOps podcast.